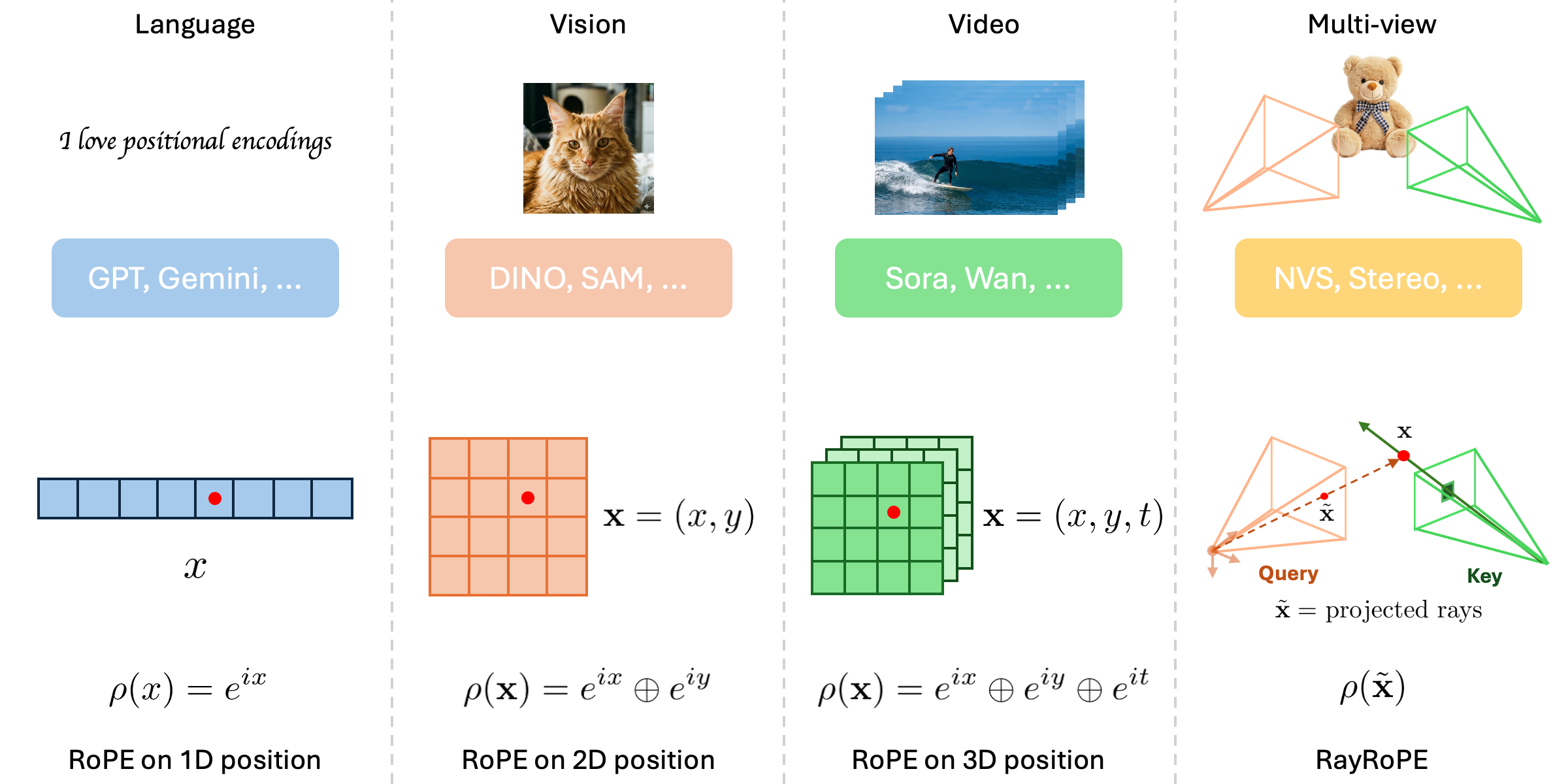

We study positional encodings for multi-view transformers that process tokens from a set of posed input images. We seek a mechanism that encodes patches uniquely, allows SE(3)-invariant attention with multi-frequency similarity, and adapts to the geometry of the underlying scene. We find that prior (absolute or relative) encoding schemes for multi-view attention do not meet these desiderata, and present RayRoPE to address this gap. RayRoPE represents patch positions based on their associated rays. Instead of the ray direction, it uses a predicted point along each ray, which yields a geometry-aware encoding. To achieve SE(3) invariance, RayRoPE computes multi-frequency similarity using projective coordinates expressed in the query's camera frame. Lastly, the predicted 3D point along a ray may be imprecise, so RayRoPE analytically computes the expected positional encoding under this uncertainty. We validate RayRoPE on novel-view synthesis and stereo depth estimation and show that it consistently improves over alternative positional encoding schemes (e.g., a 15% relative improvement in LPIPS on Co3D). We also show that RayRoPE can seamlessly incorporate RGB-D input, yielding even larger gains over alternatives that cannot positionally encode this information.
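The pipeline described above can be sketched for a single query-key pair. The steps are: take a predicted point along each patch ray, re-express it in the query camera's projective coordinates, then encode it at several frequencies. This is a simplified illustration under stated assumptions, not the RayRoPE implementation. The following are all hypothetical:

- the helper names;
- the world-to-camera convention `x_cam = R @ x_world + t`;
- the choice of `(x/z, y/z, 1/z)` as the projective coordinates;
- the frequency bank.

The rotation step is standard RoPE applied per coordinate and per frequency. As a result, the attention logit depends only on the difference between the two encoded positions.

```python
import numpy as np

def ray_point(origin, direction, depth):
    """World-frame 3D point at distance `depth` along the ray (origin, direction)."""
    d = np.asarray(direction, dtype=float)
    return np.asarray(origin, dtype=float) + depth * d / np.linalg.norm(d)

def projective_coords(point_world, R_q, t_q):
    """Map a world point into the query camera frame (ASSUMED convention:
    x_cam = R_q @ x_world + t_q) and return (x/z, y/z, 1/z) (ASSUMED form)."""
    x, y, z = R_q @ point_world + t_q
    return np.array([x / z, y / z, 1.0 / z])

def rope_rotate(vec, pos, freqs):
    """Rotate consecutive feature pairs of `vec`, one 2D rotation per
    (coordinate, frequency); requires len(vec) == 2 * len(pos) * len(freqs)."""
    out = np.array(vec, dtype=float)
    i = 0
    for c in pos:
        for w in freqs:
            ca, sa = np.cos(w * c), np.sin(w * c)
            x0, x1 = out[i], out[i + 1]
            out[i], out[i + 1] = ca * x0 - sa * x1, sa * x0 + ca * x1
            i += 2
    return out

def attention_logit(q, k, pos_q, pos_k, freqs):
    """Dot product of rotated features; depends only on pos_k - pos_q."""
    return rope_rotate(q, pos_q, freqs) @ rope_rotate(k, pos_k, freqs)

# Hypothetical example: query camera at the world origin, looking along +z.
freqs = [1.0, 2.0, 4.0, 8.0]                    # hypothetical frequency bank
R_q, t_q = np.eye(3), np.zeros(3)
pos_q = projective_coords(ray_point([0.0, 0.0, 0.0], [0.0, 0.0, 1.0], 4.0), R_q, t_q)
pos_k = projective_coords(ray_point([0.5, 0.0, 0.0], [0.0, 0.0, 1.0], 2.0), R_q, t_q)
# pos_q -> [0.0, 0.0, 0.25], pos_k -> [0.25, 0.0, 0.5]

rng = np.random.default_rng(0)
dim = 2 * 3 * len(freqs)                        # 24 feature channels
q, k = rng.normal(size=dim), rng.normal(size=dim)
print(attention_logit(q, k, pos_q, pos_k, freqs))
```

Using several frequencies lets the similarity respond to both coarse and fine differences in position.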

We live in a 3D world, and the positional encoding of transformers should be 3D-aware as well. In this project, we propose RayRoPE, a novel relative positional encoding mechanism designed for multi-view attention.

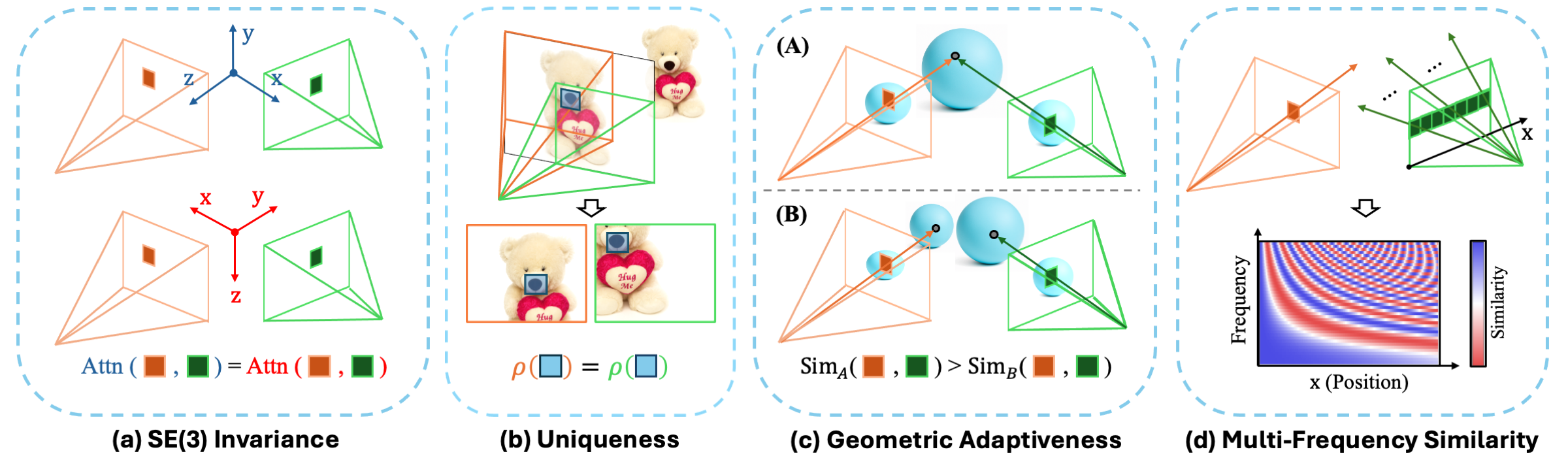

We argue that an ideal positional encoding for multi-view attention should satisfy four desirable properties illustrated below:

| Method | SE(3) Invariance | Uniqueness | Geometric Adaptiveness | Multi-frequency |
|---|---|---|---|---|
| Plücker Raymap | ✗ | ✓ | ✗ | ✗ |
| PRoPE | ✓ | ✗ | ✗ | ✗ (only for patch indices) |
| RayRoPE (Ours) | ✓ | ✓ | ✓ | ✓ |
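The SE(3)-invariance property can be checked numerically with a small sketch. It assumes a world-to-camera convention `x_cam = R @ x_world + t`; this convention is an assumption, not taken from the source. Moving the whole scene (cameras and points) by one global rigid transform leaves a point's coordinates in the query camera frame unchanged. World-frame quantities do change, such as the point itself or the rays that an absolute Plücker raymap encodes. The helper names below are hypothetical.

```python
import numpy as np

def rotation_about_axis(axis, angle):
    """Rodrigues' formula: 3x3 rotation by `angle` radians about `axis`."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    K = np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * K @ K

def to_camera(x_world, R, t):
    """ASSUMED world-to-camera convention: x_cam = R @ x_world + t."""
    return R @ x_world + t

def apply_global_transform(R, t, x_world, R_g, t_g):
    """Move the whole scene by x -> R_g @ x + t_g, updating the camera extrinsics
    so that the camera keeps the same pose relative to the scene."""
    x_new = R_g @ x_world + t_g
    R_new = R @ R_g.T
    t_new = t - R_new @ t_g
    return R_new, t_new, x_new

R_q, t_q = rotation_about_axis([0, 1, 0], 0.3), np.array([0.1, 0.0, 1.0])
x = np.array([0.5, -0.2, 3.0])                  # a (hypothetical) predicted 3D point
R_g, t_g = rotation_about_axis([1, 1, 0], 1.1), np.array([2.0, -1.0, 0.5])

before = to_camera(x, R_q, t_q)
R_q2, t_q2, x2 = apply_global_transform(R_q, t_q, x, R_g, t_g)
after = to_camera(x2, R_q2, t_q2)
print(np.allclose(before, after))   # True: query-frame coordinates are invariant
print(np.allclose(x, x2))           # False: absolute world coordinates are not
```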

We train the LVSM model with different positional encodings and compare their novel-view synthesis results below.


We evaluate stereo depth estimation with the UniMatch model. The 3D point clouds below show the depth predicted by each method:

Point-cloud panels: Ground Truth | UniMatch | PRoPE | RayRoPE (Ours)

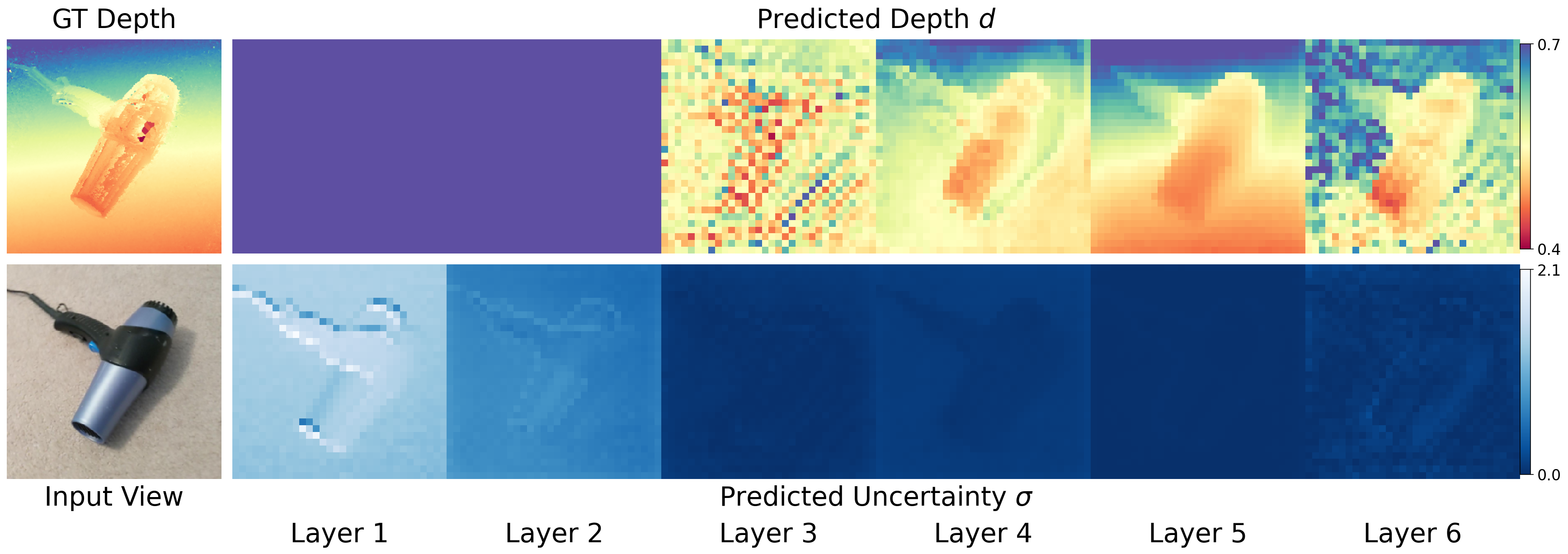

RayRoPE predicts per-patch depths and uncertainties, which are used to compute the positional encodings. Even without depth supervision during training, reasonable depth predictions emerge, especially in later layers. Depth prediction accuracy is inversely correlated with the predicted uncertainty.
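For simple distributions, the expected encoding under uncertainty has a closed form. As an illustration only, assume each encoded coordinate is Gaussian, `x ~ N(mu, sigma^2)`. The source does not state which distribution RayRoPE actually uses. Under this assumption, `E[cos(w x)] = exp(-w^2 sigma^2 / 2) * cos(w mu)`, and likewise for sine. The expected RoPE rotation is therefore the nominal rotation scaled by a damping factor. That factor suppresses high-frequency components most strongly when the predicted uncertainty is large. The function names below are hypothetical.

```python
import numpy as np

def expected_rope_factors(mu, sigma, freqs):
    """E[cos(w x)] and E[sin(w x)] for x ~ N(mu, sigma^2) (ASSUMED Gaussian), one
    entry per frequency w, via the Gaussian characteristic function
    E[exp(i w x)] = exp(i w mu - w^2 sigma^2 / 2)."""
    w = np.asarray(freqs, dtype=float)
    damp = np.exp(-0.5 * (w * sigma) ** 2)      # attenuation grows with w and sigma
    return damp * np.cos(w * mu), damp * np.sin(w * mu)

def expected_rotate_pair(x0, x1, mu, sigma, w):
    """Apply the expected 2D rotation E[R(w x)] to one feature pair; by linearity
    this equals the expectation of the rotated pair E[R(w x) @ (x0, x1)]."""
    c, s = expected_rope_factors(mu, sigma, [w])
    return c[0] * x0 - s[0] * x1, s[0] * x0 + c[0] * x1

# Confident vs. uncertain prediction for the same mean coordinate (hypothetical values).
freqs = [1.0, 2.0, 8.0]
for sigma in (0.0, 0.3):
    c, s = expected_rope_factors(0.7, sigma, freqs)
    print(sigma, np.hypot(c, s))   # per-frequency magnitude: 1 when sigma = 0, damped otherwise
```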
